Chaos Game Representation

Contents

Chaos Game Representation#

# run this code block before executing the rest of the notebook

%matplotlib inline

import math

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits import mplot3d

from mpl_toolkits.mplot3d import Axes3D

from matplotlib.colors import ListedColormap

import random

import csv

import sympy

from sympy.ntheory.continued_fraction import continued_fraction_iterator

import skimage.io

from skimage.metrics import structural_similarity as ssim

import pickle

import seaborn as sns

import pandas as pd

from sklearn import manifold, decomposition

import multiprocessing as mp

Introduction#

A heavy warning used to be given [by lecturers] that pictures are not rigorous; this has never had its bluff called and has permanently frightened its victims into playing for safety. Some pictures, of course, are not rigorous, but I should say most are (and I use them whenever possible myself).

—J.E. Littlewood, [Littlewood, 1986](p. 53)

Finding hidden patterns in long sequences can be both difficult and valuable. Representing these sequences in a visual way can often help. The human brain is very adept at detecting patterns visually. Indeed, sometimes too adept: when humans detect spurious patterns that aren’t really there, it’s called pareidolia. That human tendency is what originally provoked the “heavy warning” against pictures that Littlewood complained about above. Nonetheless, pictures can help a lot, to generate conjectures, and sometimes even indicate a path towards a proof, even if sometimes “only” an experimental proof using comparison with data.

The so-called chaos game representation (CGR) of a sequence of integers is a particularly useful technique for pattern detection, which visualizes a one-dimensional sequence in a two-dimensional space. The CGR is presented as a scatter plot, most frequently on a square in which each corner represents an element that appears in the sequence. The results of these CGRs can look like fractals, but even so can be visually recognizable and distinguishable from each other. Interestingly, the distinguishing characteristics can sometimes be made quantitative, with a good notion of “distance between images.” This is an indirect way of constructing an equivalent notion of “distance between sequences,” but it turns out to be simpler to think about.

There is an excellent video by Robin Truax on CGR and Sierpinksi’s triangle; worth watching now, or you can save it for later. Now back to our unit.

Many applications, such as analysis of DNA sequences [Goldman, 1993, Jeffrey, 1990, Jeffrey, 1992, Karamichalis et al., 2015] and protein structure [Basu et al., 1997, Fiser et al., 1994], have shown the usefulness of CGR and the notion of “distance between images”; we will discuss these applications briefly in CGR of Biological Sequences. Before that, in Creating Fractals using Iterated Function Systems (IFS), we will look at a random iteration algorithm for creating fractals. In CGR of Mathematical Sequences, we will apply CGR to simple abstract mathematical sequences, including the digits of \(\pi\) and the partial quotients of continued fractions. Additionally, we will look at certain “distances between images” that are produced. Finally, we will explain how some of the patterns arise, and what these depictions can indicate to us, or sometimes even tell us rigorously, about the sequences.

One important pedagogical purpose of this module is to provoke a discussion about randomness, or what it means to be random. In this paper, we at first use the word “random” very loosely, on purpose. Indeed, we will use several words loosely, such as “fractal” and “attractor” or “attracting set”. We promise to provide references at the end that will provide more careful definitions, and tighten up the discussion.

What is probability? I asked myself this question many years ago, and found that various authors gave different answers. I found that there were several main schools of thought with many variations.

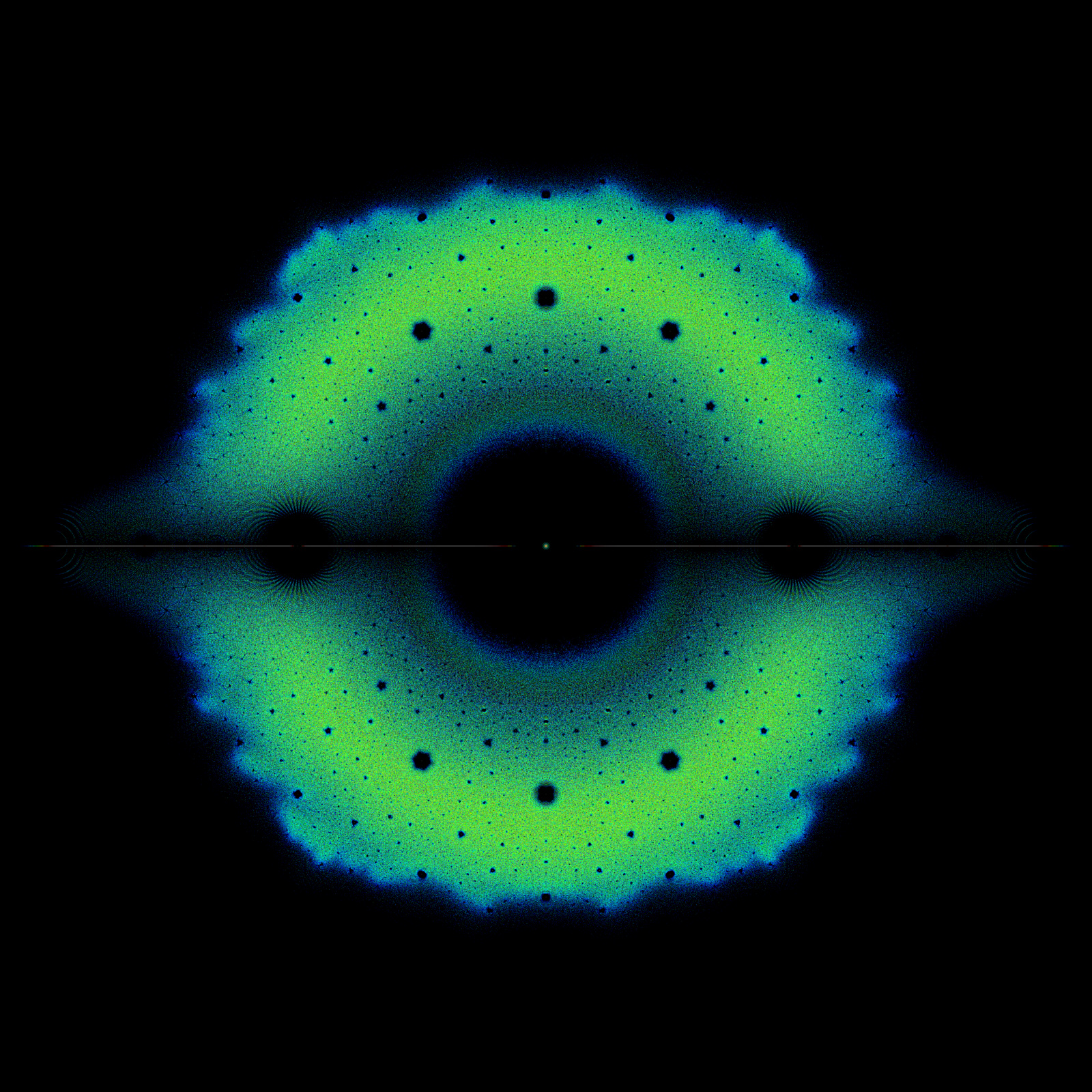

Fig. 8 DNA of human beta globin region on chromosome 11 (HUMHBB)—73308 bps#

A Note to the Student/Reader#

This is the most complicated unit in this OER, both for concepts and for code. After having been exposed to the prior material, we think that you can handle this now, even though it is more complicated. Another complication is that there are genuine applications discussed here, with real data (unlike in any of the previous chapters). The newest parts of the unit include the necessity for handling data. In some sense that code is not very mathematical, being data transfer rather than data transformation; but it is necessary to learn, if one is going to be working with real data. Indeed, that may be motivating for some of you, with the buzzword “Data Science” floating in the back of your mind. Yes, this unit can be considered as an introduction to Data Science.

This entails yet another difficulty, which is that all of the data-handling packages listed above have to be installed correctly, and this is not always straightforward.

As far as the math goes, we also spend quite a bit of time on the notion of randomness. Again, this is largely new to this unit.

A Note to the Instructor#

A version of this chapter will be published in SIAM Review in its Education section. We are grateful that the SIAM publishers (of the Journal and of SIAM Books) have agreed to this. There are some differences to the paper version, of course. The paper version was submitted two years ago, and accepted eight months ago, and this unit has been edited a few times since then. The main difference is that we have tried to make this unit address the student/reader more directly, while the paper version was addressed more to the instructor.

One of the main uses of this unit will be to provoke discussion on what it means to be random. How you choose to handle this with your class is of course up to you, but we see several opportunities, chief among them to get the students to ask their own questions about randomness.

This module can be used to teach students about a useful visualization of sequences, in which case the emphasis from the instructor could be on interpreting the results, perhaps by using structural similarity or distance maps. The module can also be used to teach elementary programming techniques, in which case the instructor can emphasize programming tools, correctness, efficiency, style, or analysis.

Using elementary sequences of integers makes the module accessible even to first-year students, avoiding difficult biological details: but even so, students are exposed to the deep concepts of pattern and randomness more or less straight away.

Another thing is that much of this material was new to us when Lila Kari introduced the class to the notions, at a guest lecture. We learned it at much the same time as the students did. This worked well, and so we recommend that you let the students lead some of the discussions. If you are in a similar situation to the one we were in, we think that you will enjoy this. The students might be a little anxious about leading, but it can be made to work. Of course you will want to read ahead and read some enrichment material; there is a lot.

Creating Fractals using Iterated Function Systems (IFS)#

One random iteration algorithm for creating pictures of fractals uses the fixed attracting set of an iterated function system (IFS). Before defining IFS, we will first define some necessary preliminaries.

Affine Transformation in the Euclidean Plane#

We write a two dimensional affine transformation in the Euclidean plane \(w: \mathbb{R}^{2} \to \mathbb{R}^{2}\) in the form

where \(a\), \(b\), \(c\), \(d\), \(e\), and \(f\) are real numbers [Barnsley, 2014]. We will use the following more compact notation:

The matrix \(A\) is a \(2 \times 2\) real matrix and can always be written in the form

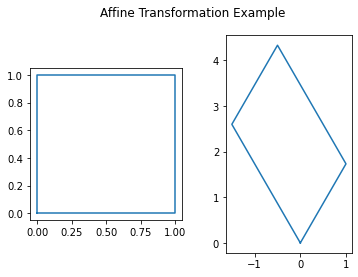

where \(\left(r_{1}, \theta_{1}\right)\) are the polar coordinates of the point \(\left(a, c\right)\) and \(\left(r_{2}, \left(\theta_{2} + \frac{\pi}{2}\right)\right)\) are the polar coordinates of the point \(\left(b, d\right)\). An example of a linear transformation

in \(\mathbb{R}^{2}\) is shown in in the following figure. We can see from this figure that the original shape remains as a parallelogram, but now has changed in size and in rotation. This is what we mean by linear transformation.

p = np.array([[0, 0, 1, 1, 0], [0, 1, 1, 0, 0]])

t = np.array([[2*math.cos(math.pi/3), -3*math.sin(math.pi/6)], [2*math.sin(math.pi/3), 3*math.cos(math.pi/6)]])

A = np.matmul(t, p)

fig, (ax1, ax2) = plt.subplots(1, 2)

fig.suptitle('Affine Transformation Example')

ax1.plot(p[0, :], p[1, :])

ax1.set_aspect('equal', 'box')

ax2.plot(A[0, :], A[1, :])

ax2.set_aspect('equal', 'box')

plt.show()

Iterated Function System#

An iterated function system (IFS)1 is a finite set of contraction mappings on a metric space. That is, each mapping shrinks distances between points, so that the distance between \(f(x)\) and \(f(y)\) is smaller than the distance between \(x\) and \(y\). These can be used to create pictures of fractals. These pictures essentially show what is called the attractor of the IFS. We will be defining what an attractor is in Chaos Game. In this paper, we will only be looking at the form where each contraction mapping is an affine transformation on the metric space \(\mathbb{R}^2\).

We will illustrate the algorithms for an IFS as an example. Consider the following maps:

each with the probability factor of \(\frac{1}{3}\), representing that each of these three options is to be chosen in an equally-likely way. These values can also be displayed as a table, where the order of the coefficients \(a\) through \(f\) corresponds to the order presented in Equation (71) and \(p\) represents the probability factor. The corresponding table for Equation (72) can be found in Table 1.

w |

a |

b |

c |

d |

e |

f |

p |

|---|---|---|---|---|---|---|---|

1 |

\(\frac{1}{2}\) |

\(0\) |

\(0\) |

\(\frac{1}{2}\) |

\(0\) |

\(0\) |

\(\frac{1}{3}\) |

2 |

\(\frac{1}{2}\) |

\(0\) |

\(0\) |

\(\frac{1}{2}\) |

\(0\) |

\(\frac{1}{2}\) |

\(\frac{1}{3}\) |

3 |

\(\frac{1}{2}\) |

\(0\) |

\(0\) |

\(\frac{1}{2}\) |

\(\frac{1}{2}\) |

\(\frac{1}{2}\) |

\(\frac{1}{3}\) |

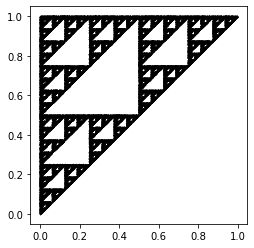

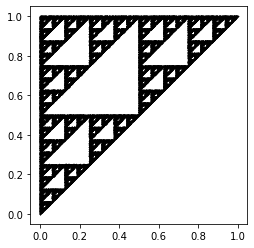

To create a fractal picture using IFS, we first choose a starting point \((x_0, y_0)\). We then randomly choose a map from our IFS and evaluate it at our starting point \((x_0, y_0)\) to get our next point \((x_1, y_1)\). We do this again many times (determined by the user) until we see a pattern. The Python code shown below computes and plots 50000 points corresponding to the IFS from Table 1. To choose which map to use for each iteration, we used a uniform random number generator random.randint to choose a number between (and including) 0 and 2. Each value corresponds to a map in our IFS. It is clear that the resulting figure shows a fractal, which appears to be2 the Sierpinski triangle [Barnsley, 2014, Feldman, 2012], where its three vertices are located at \((0, 0)\), \((0, 1)\) and \((1, 1)\).

n = 50000

pts = np.zeros((2, n))

rseq = [random.randint(0, 2) for x in range(n-1)]

for i in range(1, n):

if rseq[i-1] == 0:

pts[:, i] = np.array([[0.5, 0], [0, 0.5]]).dot(pts[:, i-1]) + np.array([0, 0])

elif rseq[i-1] == 1:

pts[:, i] = np.array([[0.5, 0], [0, 0.5]]).dot(pts[:, i-1]) + np.array([0, 0.5])

else:

pts[:, i] = np.array([[0.5, 0], [0, 0.5]]).dot(pts[:, i-1]) + np.array([0.5, 0.5])

plt.plot(pts[0, :], pts[1, :], 'k.', markersize=1)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

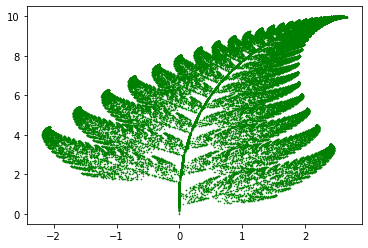

Iterated function systems can be quite versatile; a set of several attractors can be used to create different shapes and even can resemble real-life objects. An example of this is Barnsley’s fern, which resembles the Black Spleenwort [Barnsley, 2014]. The map is displayed in the following table:

w |

a |

b |

c |

d |

e |

f |

p |

|---|---|---|---|---|---|---|---|

1 |

0.00 |

0.00 |

0.00 |

0.16 |

0.00 |

0.00 |

0.01 |

2 |

0.85 |

0.04 |

-0.04 |

0.85 |

0.00 |

1.60 |

0.85 |

3 |

0.20 |

-0.26 |

0.23 |

0.22 |

0.00 |

1.60 |

0.07 |

4 |

-0.15 |

0.28 |

0.26 |

0.24 |

0.00 |

0.44 |

0.07 |

We can use Python to produce the plot of the IFS given above.

n = 50000

fern = np.zeros((2, n))

for i in range(1, n):

r = random.random()

if r <= 0.01:

fern[:, i] = np.array([[0, 0], [0, 0.16]]).dot(fern[:, i-1])

elif r <= 0.86:

fern[:, i] = np.array([[0.85, 0.04], [-0.04, 0.85]]).dot(fern[:, i-1]) + np.array([0, 1.6])

elif r <= 0.93:

fern[:, i] = np.array([[0.2, -0.26], [0.23, 0.22]]).dot(fern[:, i-1]) + np.array([0, 1.6])

else:

fern[:, i] = np.array([[-0.15, 0.28], [0.26, 0.24]]).dot(fern[:, i-1]) + np.array([0, 0.44])

plt.plot(fern[0, :], fern[1, :], 'g.', markersize=1)

plt.show()

This image resembles a “black spleenwort” (genus Asplenium), which you might want to look up in order to compare the mathematical image above to a real plant.

CGR Activity 1

Playing with the coefficients of the transformation functions will create mutant fern varieties. One example of a mutant has the following IFS

w |

a |

b |

c |

d |

e |

f |

p |

|---|---|---|---|---|---|---|---|

1 |

0.00 |

0.00 |

0.00 |

0.25 |

0.00 |

-0.4 |

0.02 |

2 |

0.95 |

0.005 |

-0.005 |

0.93 |

-0.002 |

0.5 |

0.84 |

3 |

0.035 |

-0.2 |

0.16 |

0.04 |

-0.09 |

0.02 |

0.07 |

4 |

-0.04 |

0.2 |

0.16 |

0.04 |

0.083 |

0.12 |

0.07 |

a) Modify the given Barnsley fern code to create the mutant given by the IFS above. What are the differences between the original Barnsley fern and the mutant? [What happened when we did this]

b) Create your own Barnsley fern mutant by changing the coefficients. [What happened when we did this]

CGR Activity 2

Activity 2: Paul Bourke’s website provides several images produced by IFS. Replicate some of these examples using Python. [What happened when we did this]

Chaos Game#

CGR is based on a technique from chaotic dynamics called the “chaos game”3, popularized by Barnsley [Barnsley, 2014] in 1988. The chaos game is an algorithm which allows one to produce pictures of fractal structures [Jeffrey, 1990]. In its simplest form, this can be demonstrated with a piece of paper and pencil using the following steps (adapted from [Jeffrey, 1990]):

On a piece of paper, draw three points spaced out across the page; they do not necessarily need to be spaced out evenly to form an equilateral triangle though they might be. These will determine the “gameboard”, where the picture will ultimately lie.

Label one of the points with “1, 2”, the other point with “3, 4”, and the last point with “5, 6”.

Draw a point anywhere on the page: this will be the starting point.

Roll a six-sided die. Draw an imaginary line from the current dot towards to the point with the corresponding number, and put a dot half way on the line. This becomes the new “current dot”.

Repeat step 4 (until you get bored).

Obviously, one would not want to plot a large number of points by hand4; instead, this can be done by computer; the code is shown below. For this algorithm, we assigned \((0, 0)\), \((0, 1)\) and \((1, 1)\) as the vertices of the triangle; they each are labelled with the value \(0\), \(1\), and \(2\), respectively. Instead of using a die to decide which vertex to choose, similarly to the above code, we use the built-in function random.randint, which picks from the set \(\{0, 1, 2\}\).

To calculate the new point, we take the average between the previous point and the corresponding vertex. For example, if the first random number generated was \(2\), then we would take the average between our initial point (0, 0) and the vertex that corresponds to \(2\), which is in our case is \((1, 1)\). Our new point would be \((0.5, 0.5)\). We then would repeat this process until one decides to stop.

import numpy as np

import matplotlib.pyplot as plt

import random

n = 50000

pts = np.zeros((2, n))

rseq = [random.randint(0, 2) for x in range(n-1)]

for i in range(1, n):

if rseq[i-1] == 0:

pts[:, i] = 0.5*(pts[:, i-1] + np.array([0, 0]))

elif rseq[i-1] == 1:

pts[:, i] = 0.5*(pts[:, i-1] + np.array([0, 1]))

else:

pts[:, i] = 0.5*(pts[:, i-1] + np.array([1, 1]))

plt.plot(pts[0, :], pts[1, :], 'k.', markersize=1)

plt.gca().set_aspect('equal', adjustable='box')

plt.show()

One would expect that the outcome above would show that the dots would appear randomly across the gameboard; however, this is not the case: the figure shows the result of the chaos game if the game was played 50000 times. We can see that this figure shows a fractal-like pattern (such as one of a Sierpinski triangle), and looks almost (if not) identical to the figure which resulted from the IFS.

It is quite interesting that a sequence of random numbers could produce such a distinct pattern. Indeed, some bad pseudorandom number generators were identified as being bad precisely because they failed a similar-in-spirit visualization test [Knuth, 1997]. We will discuss this issue further in Random vs Pseudorandom. However, what has happened here is that the randomness of the sequence has translated into a uniform distribution on the fractal. So, really, here the gameboard is the fractal! We will return to this point in the next section.

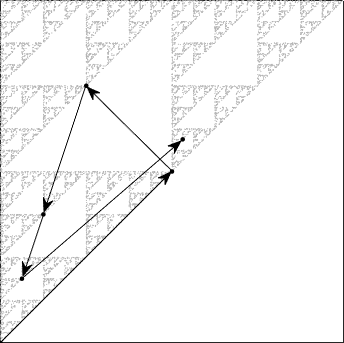

Fig. 9 shows an example of the first five steps of the chaos game (overlaid on top of a CGR with 10000 points), which corresponds to the following values that were randomly generated: 2, 1, 0, 0, 2. Displaying the CGR in this manner can give us some insight as to why this particular chaos game with three vertices gives us such a striking fractal: we can see from the figure that each of the points from the first five steps is located at a vertex (in this case the right-angled one) of one of the many triangles within the fractal. A careful observer would notice that the triangle at which the point is located becomes smaller each iteration. This implies that within the next few iterations, the triangle associated with the computed point would be too small to be seen in this illustration. [Note: if we zoom into that microscopic level, the dots would look random. Indeed, the random dots are ultimately dense on the Sierpinski triangle.] This sequence of points generated by the chaos game is called the orbit of the seed and it is attracted to the Sierpinski triangle. For another description of why this chaos game creates the Sierpinski triangle, see [Fisher et al., 2012].

Fig. 9 First 5 steps of chaos game with three vertices. In this example, the first 5 random integers generated are 2, 1, 0, 0, 2.#

Generalization of CGR#

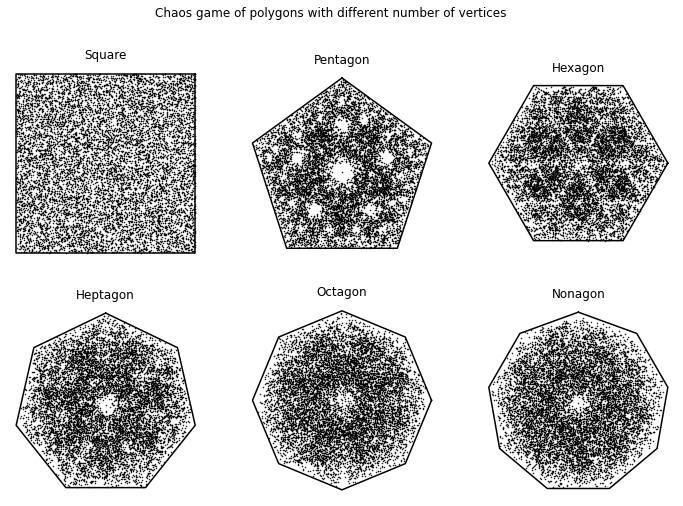

We have already seen that a fractal pattern can appear when we follow the chaos game described above for a polygon with three vertices (triangle) using a random number generator, but we are not limited to only this. There are many variations for CGR, and some are more useful than others. In this section, we will look into what patterns arise when we perform CGR on different polygons, changing the placement of the \(i\)-th point relative to the \((i-1)\)-th and its corresponding vertex, and explore which of these could potentially be useful for some applications presented in CGR of Biological Sequences.

Different Polygons#

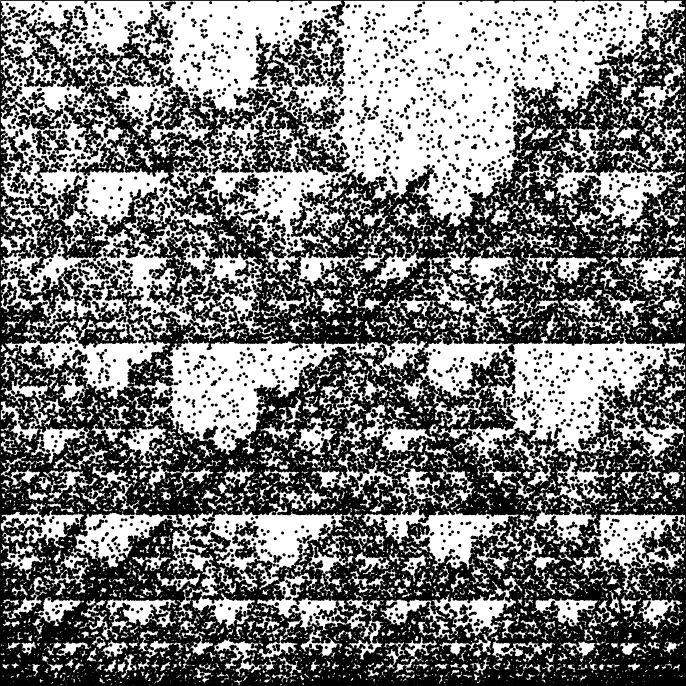

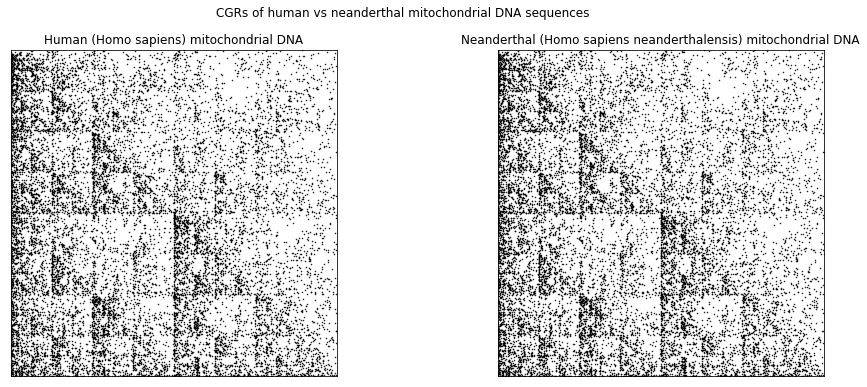

The figure created from the following code shows the chaos game representation, where the \(i\)-th point is placed halfway between the \((i-1)\)-th point and the vertex corresponding to the \(i\)-th base, for various polygons, using a sequence of 50,000 uniformly generated (pseudo)random integers. As we can see below, when the CGR is a square, there is no apparent pattern: the chaos game produced a square uniformly (to the eye) filled with points. This particularly conforms to our intuition: a random sequence should look random. The other polygons appear, in contrast, to exhibit some patterns. These patterns are due to several parts of their attractors overlapping5, or failing to overlap at all, indicated by the the uneven distribution of points (where points do appear); i.e. we can see some darker areas and some lighter areas and even gaps in the figures. Therefore, for this particular chaos game (in which we say that the dividing rate \(r\) is \(0.5\)—we will talk about this in Dividing Rate), the square is the best choice to visualize one-dimensional sequences. We will see in Generalization of CGR that we can use other polygons (we will be referring to them as \(m\)-gons) for CGR to uncover patterns in sequences not with 4 elements by using the appropriate dividing rate \(r\): that is, instead of placing a point half-way to the target vertex, we will place it at some other position along the line, with \(0 < r < 1\). A proper choice of \(r\) will ensure that a random sequence will generate a uniform density of points, possibly on a fractal.

def n_gon(n, *start):

if start:

deg = np.linspace(start[0], start[0] + 360, n+1)

else:

deg = np.linspace(0, 360, n+1)

deg = deg[:-1]

rad = []

for d in deg:

rad.append((d/360)*2*math.pi)

cor = np.zeros((2, n+1))

for r in range(len(rad)):

x = math.cos(rad[r])

y = math.sin(rad[r])

cor[:, r] = np.array([x, y])

cor[:, n] = cor[:, 0]

return cor

def polyCGR(N, n, *start):

if start:

shape_coord = n_gon(n, start[0])

else:

shape_coord = n_gon(n)

dataPoints = np.zeros((2, N))

for i in range(1, N):

r = random.random()

dataPoints[:, i] = 0.5*(dataPoints[:, i-1] + shape_coord[:, math.floor(r*n)])

return(shape_coord, dataPoints)

N = 10000

fig, ((ax1, ax2, ax3), (ax4, ax5, ax6)) = plt.subplots(2, 3, figsize=(12, 8))

fig.suptitle('Chaos game of polygons with different number of vertices')

(coord, points) = polyCGR(N, 4, 45)

ax1.plot(coord[0, :], coord[1, :], 'k')

ax1.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax1.axis('off')

ax1.set_aspect('equal', 'box')

ax1.set_title('Square')

(coord, points) = polyCGR(N, 5, 90)

ax2.plot(coord[0, :], coord[1, :], 'k')

ax2.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax2.axis('off')

ax2.set_aspect('equal', 'box')

ax2.set_title('Pentagon')

(coord, points) = polyCGR(N, 6)

ax3.plot(coord[0, :], coord[1, :], 'k')

ax3.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax3.axis('off')

ax3.set_aspect('equal', 'box')

ax3.set_title('Hexagon')

(coord, points) = polyCGR(N, 7, 90)

ax4.plot(coord[0, :], coord[1, :], 'k')

ax4.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax4.axis('off')

ax4.set_aspect('equal', 'box')

ax4.set_title('Heptagon')

(coord, points) = polyCGR(N, 8)

ax5.plot(coord[0, :], coord[1, :], 'k')

ax5.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax5.axis('off')

ax5.set_aspect('equal', 'box')

ax5.set_title('Octagon')

(coord, points) = polyCGR(N, 9, 90)

ax6.plot(coord[0, :], coord[1, :], 'k')

ax6.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax6.axis('off')

ax6.set_aspect('equal', 'box')

ax6.set_title('Nonagon')

plt.show()

Dividing Rate#

In the previous subsection, we saw different patterns appear for different shapes. We saw that shapes with more than \(4\) vertices seemed to have several parts of the attractor overlapping, producing visibly nonuniform density (some areas were visibly darker than others). Here we will show that this attractor overlapping also happens when choosing a small enough dividing rate \(r\).

To be explicit, the chaos game is not restricted to the “rule” in which the new point has to be placed halfway between the current point and the corresponding vertex as depicted in the previous examples. The new point can be placed anywhere within the line segment created by the two points of reference (or even outside, if you are willing to let the points land outside the convex hull of the vertices). The placement of the new point affects how the attractor of the CGR looks. We will see this in the following example. To quantify the placement of the point, we take the proportion of which the distance between the new point and current point is from the distance between the current point and the corresponding vertex. This is called the dividing rate, \(r\). The new point \(p_{i+1}\) is thus \(p_{i+1} = p_i + r(V-p_i)\). Therefore, when the new point is placed halfway between the current point and the vertex, the dividing rate is \(r = 0.5\), and if the new point is placed closer to the location of the current point, then the dividing rate \(r < 0.5\), whereas if the point is closer to the vertex, then the dividing rate \(r > 0.5\).

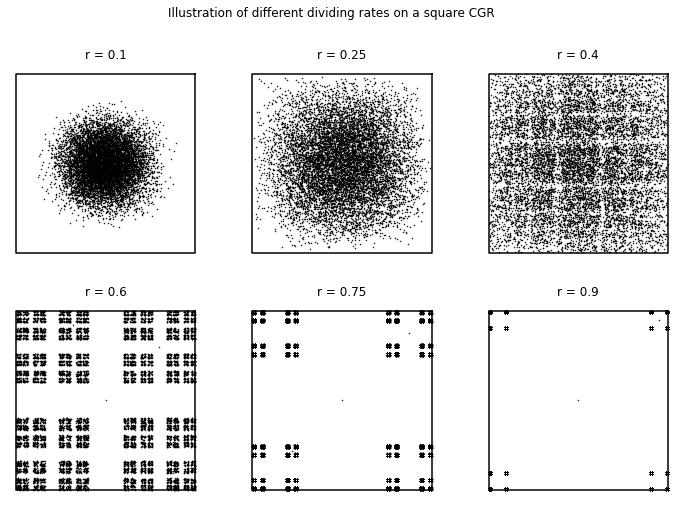

To continue this discussion, let us vary \(r\) for the square-shaped CGR of a sequence of random integers. As we have seen in the previous subsection, the square-shaped CGR did not exhibit any fractal patterns when \(r = 0.5\), so we wondered if we varied \(r\), would this change? The following code gives the square CGRs for \(r = 0.1, 0.25, 0.4, 0.6, 0.75,\) and \(0.9\). From these figures, we see how \(r\) affects the visualization.

def dividingRateCGR(N, r):

shape_coord = n_gon(4, 45)

dataPoints = np.zeros((2, N))

for i in range(1, N):

rand = random.random()

dataPoints[:, i] = dataPoints[:, i-1] + (shape_coord[:, math.floor(4*rand)] - dataPoints[:, i-1])*r

return(shape_coord, dataPoints)

N = 10000

fig, ((ax1, ax2, ax3), (ax4, ax5, ax6)) = plt.subplots(2, 3, figsize=(12, 8))

fig.suptitle('Illustration of different dividing rates on a square CGR')

(coord, points) = dividingRateCGR(N, 0.1)

ax1.plot(coord[0, :], coord[1, :], 'k')

ax1.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax1.axis('off')

ax1.set_aspect('equal', 'box')

ax1.set_title('r = 0.1')

(coord, points) = dividingRateCGR(N, 0.25)

ax2.plot(coord[0, :], coord[1, :], 'k')

ax2.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax2.axis('off')

ax2.set_aspect('equal', 'box')

ax2.set_title('r = 0.25')

(coord, points) = dividingRateCGR(N, 0.4)

ax3.plot(coord[0, :], coord[1, :], 'k')

ax3.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax3.axis('off')

ax3.set_aspect('equal', 'box')

ax3.set_title('r = 0.4')

(coord, points) = dividingRateCGR(N, 0.6)

ax4.plot(coord[0, :], coord[1, :], 'k')

ax4.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax4.axis('off')

ax4.set_aspect('equal', 'box')

ax4.set_title('r = 0.6')

(coord, points) = dividingRateCGR(N, 0.75)

ax5.plot(coord[0, :], coord[1, :], 'k')

ax5.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax5.axis('off')

ax5.set_aspect('equal', 'box')

ax5.set_title('r = 0.75')

(coord, points) = dividingRateCGR(N, 0.9)

ax6.plot(coord[0, :], coord[1, :], 'k')

ax6.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax6.axis('off')

ax6.set_aspect('equal', 'box')

ax6.set_title('r = 0.9')

plt.show()

For \(r < 0.5\), we can see that some patterns appear, especially those with a dividing rate close to \(r = 0.5\). More importantly, we see a nonuniformity of distribution in the square. In contrast, we can see for \(r = 0.6\) that the points fill the corners of the plot area, but they are unevenly-spaced (in comparison to what we have seen for \(r = 0.5\)). This suggests that when \(r < 0.5\), the attractors are overlapping, indicated by the darker regions of the plot due to the points being more densely packed, and that when \(r>0.5\) the attractor lies on a fractal and is apparently uniformly distributed thereon. For \(r\) significantly less than \(1/2\), we can see that the CGR becomes more circular with the points spaced more closely together. From this, it infers that as \(r\) decreases, more of the attractors overlap.

For \(r > 0.5\), we can see that a different pattern appears (compared to those with a dividing rate \(r < 0.5\)). The patterns shown for the CGRs with a dividing ratio \(r > 0.5\) visibly resemble each other. The \(r = 0.9\) case looks like a smaller version of the \(r = 0.75\) case, which similarly, looks like a sparser version of the \(r = 0.6\) case. In contrast to the CGR with the dividing rate \(r = 0.5\), we can see that the points are spread apart from each other. This can possibly indicate that the attractors do not overlap, and even do not touch each other. Although this can be used to distinguish any nonrandomness, it would be much more difficult to see with the human eye than using the dividing rate \(r = 0.5\).

For applications which need a different number of vertices than \(m=4\), it turns out to be optimal to find a dividing rate \(r\) for different polygons that can offer maximum packing of nested, non-overlapping attractors, in order to help find a pattern in a given sequence. In the next subsection, we look at this optimal value of \(r\) as reported in the literature.

Generalization of CGR#

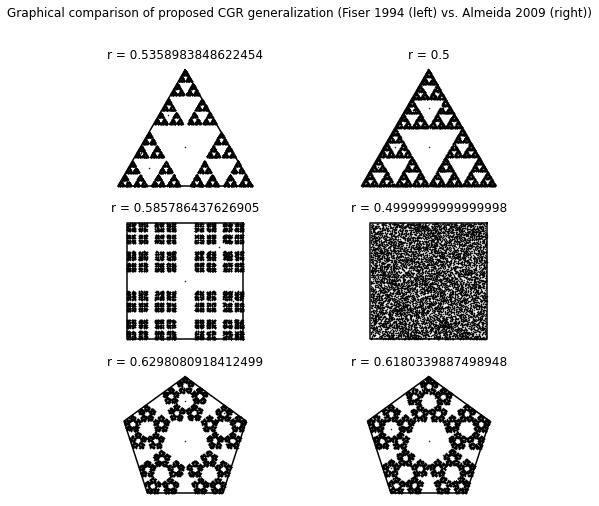

In [Fiser et al., 1994], Fiser et al. generalized CGR to be applicable for sequences of any number of elements \(m > 2\). There are many ways of generalizing CGR, some of which are highlighted in [Almeida and Vinga, 2009], but the generalization the authors of the earlier article chose was to use an \(m\)-sided polygon (which they refer to as an \(m\)-gon), where \(m\) was the number of elements in a sequence that should be represented [Fiser et al., 1994]. As we saw previously in Different Polygons, the attractors of the \(m\)-gons for when \(m > 4\) and \(r=0.5\) overlapped as shown by the uneven distribution of the points. Because of this, the picture is ambiguous and is not as useful to serve as a way to identity patterns in sequences. To combat this, Fiser et al. introduced a formula to calculate the dividing rate for \(m\)-gons for different values of \(m\): by putting each attractor piece inside a non-overlapping circle, they derived

Although the dividing rate calculated from this formula does prevent attractors from overlapping, Almeida and Vinga noticed in [Almeida and Vinga, 2009] that the resulting attractors are not optimally packed. For example, for \(m = 4\), the solution gives a dividing rate \(r = 0.585786\) instead of \(r = 0.5\), which works perfectly well (and indeed is maybe better). In [Almeida and Vinga, 2009], the authors gave a more complicated formula for the dividing rate, which they say they derived by using basic trigonometry to pack polygons (instead of circles) into the outer \(m\)-gon. We have simplified their formula considerably to get

where

If we use \(k= (m+2)/4\) without rounding to an integer, this formula simplifies to the previous one. This fact was not noted in [Almeida and Vinga, 2009]. We note some special values: when \(m=3\) or \(m=4\), this formula gives \(r=0.5\). When \(m=5\), this formula gives \(r = (\sqrt{5}-1)/2 = 0.618\ldots\). Various other \(m\) give algebraic values. When \((m+2)/4\) is half-way between integers, either choice of rounding for \(k\) gives the same \(r\).

The figure created from the following code (the figure appears immediately after the code) shows the generalized CGRs for various \(m\)-gons using the division formula from [Fiser et al., 1994] (left) and [Almeida and Vinga, 2009] (right).

def dividingRateFiser(n):

return (1 + math.sin(math.pi/n))**(-1)

def dividingRateAlmeida(n):

k = round((n+2.)/4.)

s_num = 2*math.cos(math.pi*((1/2) - (k/n))) - 2*math.cos(math.pi*((1/2)-(1/(2*n))))*math.cos((2*k-1)*(math.pi/(2*n)))*(1 + (math.tan((2*k-1)*(math.pi/(2*n))))/(math.tan(math.pi-((n+2*k-2)*(math.pi/(2*n))))))

s_den = 2*math.cos(math.pi*((1/2) - (k/n)))

return s_num/s_den

def generalizedCGR(N, divide_func, n, *start):

if start:

shape_coord = n_gon(n, start[0])

else:

shape_coord = n_gon(n)

r = divide_func(n)

dataPoints = np.zeros((2, N))

for i in range(1, N):

rand = random.random()

dataPoints[:, i] = dataPoints[:, i-1] + (shape_coord[:, math.floor(n*rand)] - dataPoints[:, i-1])*r

return(r, shape_coord, dataPoints)

N = 10000

fig, ((ax1, ax2), (ax3, ax4), (ax5, ax6)) = plt.subplots(3, 2, figsize=(8, 8))

fig.suptitle('Graphical comparison of proposed CGR generalization (Fiser 1994 (left) vs. Almeida 2009 (right))')

(r, coord, points) = generalizedCGR(N, dividingRateFiser, 3, 90)

ax1.plot(coord[0, :], coord[1, :], 'k')

ax1.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax1.axis('off')

ax1.set_aspect('equal', 'box')

ax1.set_title('r = {}'.format(r))

(r, coord, points) = generalizedCGR(N, dividingRateAlmeida, 3, 90)

ax2.plot(coord[0, :], coord[1, :], 'k')

ax2.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax2.axis('off')

ax2.set_aspect('equal', 'box')

ax2.set_title('r = {}'.format(r))

(r, coord, points) = generalizedCGR(N, dividingRateFiser, 4, 45)

ax3.plot(coord[0, :], coord[1, :], 'k')

ax3.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax3.axis('off')

ax3.set_aspect('equal', 'box')

ax3.set_title('r = {}'.format(r))

(r, coord, points) = generalizedCGR(N, dividingRateAlmeida, 4, 45)

ax4.plot(coord[0, :], coord[1, :], 'k')

ax4.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax4.axis('off')

ax4.set_aspect('equal', 'box')

ax4.set_title('r = {}'.format(r))

(r, coord, points) = generalizedCGR(N, dividingRateFiser, 5, 90)

ax5.plot(coord[0, :], coord[1, :], 'k')

ax5.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax5.axis('off')

ax5.set_aspect('equal', 'box')

ax5.set_title('r = {}'.format(r))

(r, coord, points) = generalizedCGR(N, dividingRateAlmeida, 5, 90)

ax6.plot(coord[0, :], coord[1, :], 'k')

ax6.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax6.axis('off')

ax6.set_aspect('equal', 'box')

ax6.set_title('r = {}'.format(r))

plt.show()

CGR Activity 3

Using the provided code (or if you’re brave, write your own), create a generalized CGR of a 6, 9, and 12-sided polygon (using the dividing rate formula by Almeida). [What happened when we did this]

In the next section, we will look at applications of CGR, mainly biological and mathematical. For the following examples, unless otherwise specified, assume that \(r = 0.5\).

CGR of Biological Sequences#

The chaos game representation has been applied to biological areas. In this section, we will look at how the chaos game has been applied to DNA and protein (amino acids) sequencing and visualizations. We also will give a brief overview of the Map of Life, an extension of the visualization of DNA sequences. The Map of Life shows potential in allowing researchers to quantitatively classify species that was once unclear in its taxonomy.

Nucleotide Sequences#

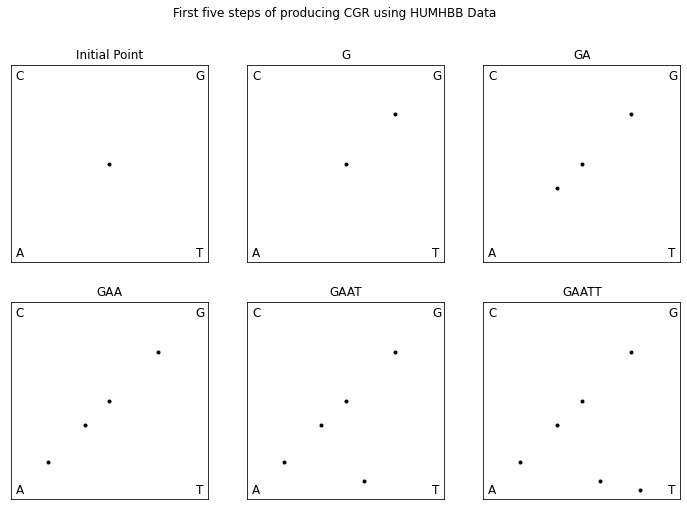

H. J. Jeffrey was the first to propose using the chaos game representation as a novel way of visualizing nucleotide sequences. This revealed previously unknown patterns in certain proteins [Jeffrey, 1990, Jeffrey, 1992]. The genetic sequence is made up of four bases: adenine (A), guanine (G), cytosine (C), and either thymine (T) or uracil (U) for DNA or RNA, respectively. Using a square-shaped CGR, we label the four corners with the name of each base. In this paper, A is in the bottom-left corner, C in the top-left, G in the top-right, and U/T in the bottom right. Rather than use a random number generator to determine which map to use for each iteration as we did in Chaos Game, we follow the genetic sequence that we want to create a CGR for.

To demonstrate the chaos game for DNA sequences, let us walk through plotting the first five bases of the DNA sequence HUMHBB (human beta globin region, chromosome 11), “GAATT”, shown in Fig. 8. We first mark the center as our initial point. The first base in the sequence is “G” so, we plot a point half way between our initial point and the “G” corner. The next base in the sequence is “A” so we plot a point half way between the point that we just plotted and the “A” corner. The following is the code to recreate the CGR.

def parse_sequence(filename):

with open(filename) as inputfile:

next(inputfile)

results = list(csv.reader(inputfile))

seq = ''

for r in results[:-1]:

seq = seq + r[0]

return seq

def dna_cgr(seq):

N = len(seq)

dataPoints = np.zeros((2, N+1))

dataPoints[:, 0] = np.array([0.5, 0.5])

for i in range(1, N+1):

if seq[i-1] == 'A':

corner = np.array([0, 0])

elif seq[i-1] == 'C':

corner = np.array([0, 1])

elif seq[i-1] == 'G':

corner = np.array([1, 1])

else:

corner = np.array([1, 0])

dataPoints[:, i] = 0.5*(dataPoints[:, i-1] + corner)

return(dataPoints)

humhbb_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/HUMHBB.fasta')

coord = dna_cgr(humhbb_dna[:5])

fig, ((ax1, ax2, ax3), (ax4, ax5, ax6)) = plt.subplots(2, 3, figsize=(12, 8))

fig.suptitle('First five steps of producing CGR using HUMHBB Data')

ax1.plot(coord[0, :1], coord[1, :1], 'k.')

ax1.axes.xaxis.set_visible(False)

ax1.axes.yaxis.set_visible(False)

ax1.set_xlim([0, 1])

ax1.set_ylim([0, 1])

ax1.set_aspect('equal', 'box')

ax1.text(0.025, 0.025, 'A', fontsize=12)

ax1.text(0.025, 0.925, 'C', fontsize=12)

ax1.text(0.94, 0.925, 'G', fontsize=12)

ax1.text(0.94, 0.025, 'T', fontsize=12)

ax1.set_title('Initial Point')

ax2.plot(coord[0, :2], coord[1, :2], 'k.')

ax2.axes.xaxis.set_visible(False)

ax2.axes.yaxis.set_visible(False)

ax2.set_xlim([0, 1])

ax2.set_ylim([0, 1])

ax2.set_aspect('equal', 'box')

ax2.text(0.025, 0.025, 'A', fontsize=12)

ax2.text(0.025, 0.925, 'C', fontsize=12)

ax2.text(0.94, 0.925, 'G', fontsize=12)

ax2.text(0.94, 0.025, 'T', fontsize=12)

ax2.set_title('G')

ax3.plot(coord[0, :3], coord[1, :3], 'k.')

ax3.axes.xaxis.set_visible(False)

ax3.axes.yaxis.set_visible(False)

ax3.set_xlim([0, 1])

ax3.set_ylim([0, 1])

ax3.set_aspect('equal', 'box')

ax3.text(0.025, 0.025, 'A', fontsize=12)

ax3.text(0.025, 0.925, 'C', fontsize=12)

ax3.text(0.94, 0.925, 'G', fontsize=12)

ax3.text(0.94, 0.025, 'T', fontsize=12)

ax3.set_title('GA')

ax4.plot(coord[0, :4], coord[1, :4], 'k.')

ax4.axes.xaxis.set_visible(False)

ax4.axes.yaxis.set_visible(False)

ax4.set_xlim([0, 1])

ax4.set_ylim([0, 1])

ax4.set_aspect('equal', 'box')

ax4.text(0.025, 0.025, 'A', fontsize=12)

ax4.text(0.025, 0.925, 'C', fontsize=12)

ax4.text(0.94, 0.925, 'G', fontsize=12)

ax4.text(0.94, 0.025, 'T', fontsize=12)

ax4.set_title('GAA')

ax5.plot(coord[0, :5], coord[1, :5], 'k.')

ax5.axes.xaxis.set_visible(False)

ax5.axes.yaxis.set_visible(False)

ax5.set_xlim([0, 1])

ax5.set_ylim([0, 1])

ax5.set_aspect('equal', 'box')

ax5.text(0.025, 0.025, 'A', fontsize=12)

ax5.text(0.025, 0.925, 'C', fontsize=12)

ax5.text(0.94, 0.925, 'G', fontsize=12)

ax5.text(0.94, 0.025, 'T', fontsize=12)

ax5.set_title('GAAT')

ax6.plot(coord[0, :6], coord[1, :6], 'k.')

ax6.axes.xaxis.set_visible(False)

ax6.axes.yaxis.set_visible(False)

ax6.set_xlim([0, 1])

ax6.set_ylim([0, 1])

ax6.set_aspect('equal', 'box')

ax6.text(0.025, 0.025, 'A', fontsize=12)

ax6.text(0.025, 0.925, 'C', fontsize=12)

ax6.text(0.94, 0.925, 'G', fontsize=12)

ax6.text(0.94, 0.025, 'T', fontsize=12)

ax6.set_title('GAATT')

plt.show()

coord = dna_cgr(humhbb_dna)

plt.plot(coord[0, :], coord[1, :], 'k.', markersize=1)

plt.gca().set_aspect('equal', adjustable='box')

plt.axis('off')

plt.show()

The finished CGR is shown in Fig. 8 at the start of this section, which certainly has an identifiable fractal pattern. The most prominent repeated pattern is the unshaded area in the top right quadrant of the figure, referred to as the “double scoop” appearance by [Jeffrey, 1990], which actually appears in almost all vertebrate DNA sequences. This pattern is due to the fact that there is a comparative sparseness of guanine following cytosine in the gene sequence since CG dinucleotides are prone to methylation and subsequently mutation.

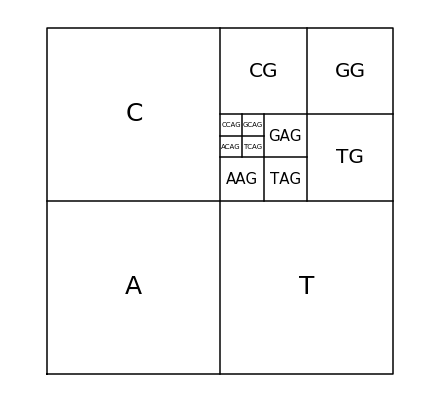

To fully understand the double scoop pattern, we must understand the biological meaning of the CGR. Each point plotted in the CGR corresponds to a base, and depending on where it is placed, we can trace back and figure out parts of the sequence we are examining [Goldman, 1993]. Fig. 10 shows the relationship corresponding area of the CGR and the DNA sequence. In reference to this figure, we can see that for any point that corresponds with base G, it will be located in the upper right quadrant of the CGR plot. To see what the previous base is, we can divide the quadrant into sub-quadrants (labeling them in the same order as the quadrants), and depending on where the point is, we can determine what the previous base of the sequence is. We can repeat this step again and again to find the order in which the bases appear in the sequence. Fig. 11 shows a CGR square where all the CG quadrants are unfilled. We can see from this figure that even though it is not a real CGR (since we did not use a sequence to produce it), we still get the same double scoop pattern found in Fig. 8, displayed at the beginning of the unit. By identifying regions of the CGR square in this way, it is possible to identify features of DNA sequences that correspond to patterns of the CGR.

Fig. 10 Correspondence between DNA sequences and areas of the CGR of DNA sequences#

Fig. 11 Explanation of the double scoop pattern—plot of CGR square with all CG quadrants unfilled.#

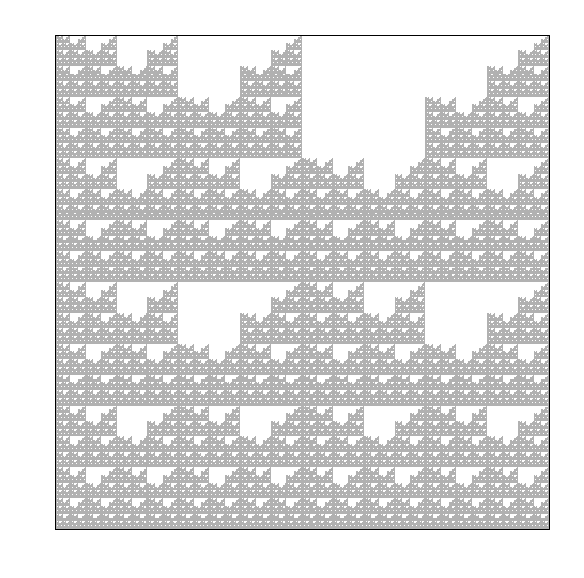

The following shows other examples of CGRs for DNA sequences. We can see that the CGR of DNA sequence of human herpesvirus strain also exhibit the double scoop pattern that we have seen for HUMHBB. This agrees with what was mentioned earlier in that it is common to see the lack of C and G dinucleotides together within the human genome. The CGR of the DNA sequence of the chloroplast of quinoa plant, on the other hand, does not show a double scoop pattern. We can see from using the CGR of DNA sequences, we are able to distinguish between different species very easily.

herpesvirus_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/KF021605.fasta')

herpesvirus_coord = dna_cgr(herpesvirus_dna)

chloroplast_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/CM008430.fasta')

chloroplast_coord = dna_cgr(chloroplast_dna)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(16, 6))

fig.suptitle('More example of CGRs of DNA sequences')

ax1.plot(herpesvirus_coord[0, :], herpesvirus_coord[1, :], 'k.', markersize=1)

ax1.axes.xaxis.set_visible(False)

ax1.axes.yaxis.set_visible(False)

ax1.set_xlim([0, 1])

ax1.set_ylim([0, 1])

ax1.set_aspect('equal', 'box')

ax1.set_title('Human herpesvirus 5 strain TR, complete genome')

ax2.plot(chloroplast_coord[0, :], chloroplast_coord[1, :], 'k.', markersize=1)

ax2.axes.xaxis.set_visible(False)

ax2.axes.yaxis.set_visible(False)

ax2.set_xlim([0, 1])

ax2.set_ylim([0, 1])

ax2.set_aspect('equal', 'box')

ax2.set_title('Chenopodium quinoa cultivar Real Blanca cholorplast, complete sequence')

plt.show()

CGR Activity 4

Produce your own CGR of DNA sequences of your chosing. Pick sequences that are from difference species, such as plants, fungi, protists, etc. It is recommended that the length of the sequence does not exceed 50000 bp. Compare the CGRs of the difference species visually? Are there any species in which their CGRs are similar to one another? How about species in which their CGRs are most different from each other? [What happened when we did this]

Map of Life#

Taking DNA sequence visualization one step further, the authors of [Kari et al., 2013] proposed a novel combination of methods to create Molecular Distance Maps. Molecular distance maps visually illustrate the quantitative relationships and patterns of proximities among the given genetic sequences and among the species they represent. To compute and visually display relationships between DNA sequences, the three techniques that were used include chaos game representation, structural dissimilarity index (DSSIM), and multi-dimensional scaling. In the paper [Kari et al., 2013], this method was applied to a variety of cases that have been historically controversial and was there demonstrated to have the potential for taxonomical clarification. In the following, we will discuss the methods used from this paper, more particularly the structural dissimilarity index and the multi-dimensional scaling as we have already looked into the chaos game representation for nucleotides.

To understand the structural dissimilarity index, we will first have to explain what the structural similarity index is. The structural similarity (SSIM) index is a method for measuring the similarity between two images based on the computation of three terms, namely luminance distortion, contrast distortion, and linear correlation [Wang et al., 2004]. It was designed to perform similarly to the human visual system, which is highly adapted to extract structural information. The overall index is a multiplicative combination of the three terms:

where

where \(\mu_{x}\), \(\mu_{y}\), \(\sigma_{x}\), \(\sigma_{y}\) and \(\sigma_{xy}\) are the local means, standard deviations, and cross-covariance for images \(x\) and \(y\). \(C_{1}\), \(C_{2}\), and \(C_{3}\) are the regularization constants for the luminance, contrast, and structural terms, respectively. If \(\alpha = \beta = \gamma = 1\), and \(C_{3} = \frac{C_{2}}{2}\), the index simplifies to:

The theoretical range of \(\operatorname{SSIM}(x, y) \in [-1, 1]\), where the high value indicates high similarity. In [Kari et al., 2013], instead of calculating the overall SSIM, they computed the local SSIM value for each pixel in the image \(x\). They refer to this as a distance matrix. The SSIM index can be computed in Python by using the function structural_similarity from the skimage.metrics library.

Now that we know how to compute the SSIM index, we are able to compute the structural dissimilarity (DSSIM) index:

whose theoretical range is \([0, 2]\) with the distance being 0 between two identical images, and 2 if the two images are negatively correlated. An example of two images that are negatively correlated would be if one is completely white, while the other is completely black. Typically, the range that DSSIM falls with is \([0, 1]\)—the authors from [Kari et al., 2015] noted that almost all (over 5 million) distances that found were between \(0\) and \(1\), with only half a dozen exceptions of distances between \(1\) and \(1.0033\).

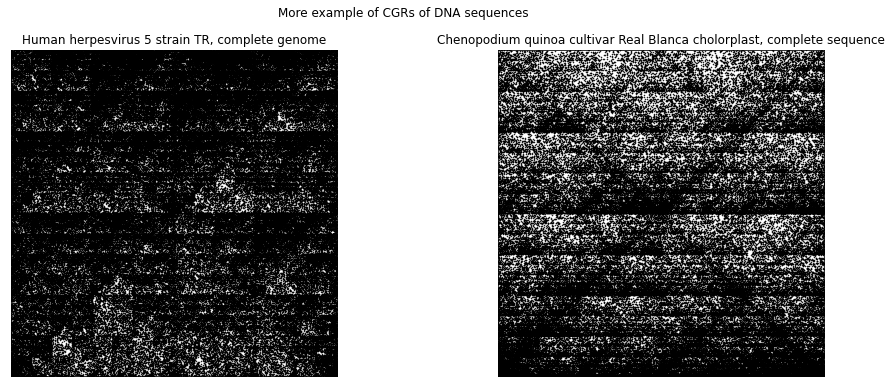

To demonstrate this, let us compare the “anatomically modern” human (Homo sapiens sapiens) mitochondrial DNA and the neanderthal (Homo sapiens neanderthalensis) mitochondrial DNA (mtDNA). As seen from their scientific classification, we can see that these two species are from the same genus, which suggests that their DNA should be similar. The following shows the CGRs of the “anatomically modern” human (left) and of the neanderthal (right). We can see that the two CGRs are quite similar (which is agrees with the fact that the two species are from the same genus) and therefore, we expect the the DSSIM to be small.

human_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/NC_012920.fasta')

human_coord = dna_cgr(human_dna)

neanderthal_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/NC_011137.fasta')

neanderthal_coord = dna_cgr(neanderthal_dna)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(16, 6))

fig.suptitle('CGRs of human vs neanderthal mitochondrial DNA sequences')

ax1.plot(human_coord[0, :], human_coord[1, :], 'k.', markersize=1)

ax1.axes.xaxis.set_visible(False)

ax1.axes.yaxis.set_visible(False)

ax1.set_xlim([0, 1])

ax1.set_ylim([0, 1])

ax1.set_aspect('equal', 'box')

ax1.set_title('Human (Homo sapiens) mitochondrial DNA')

ax2.plot(neanderthal_coord[0, :], neanderthal_coord[1, :], 'k.', markersize=1)

ax2.axes.xaxis.set_visible(False)

ax2.axes.yaxis.set_visible(False)

ax2.set_xlim([0, 1])

ax2.set_ylim([0, 1])

ax2.set_aspect('equal', 'box')

ax2.set_title('Neanderthal (Homo sapiens neanderthalensis) mitochondrial DNA')

plt.show()

In Python, in order to compare these two plots, we first need to convert each plot into a matrix. The size of the matrix is determined by the dimension of the plot (number of pixels), and each element within the matrix represents each pixel in the plot, where \(0\) represents a white pixel, \(1\) represents a black pixel, and any value in between represents a pixel that is a shade of grey. The simplest way to convert the plot to a matrix is to first save the image as a .png file. In the following code block, we show the way that we like to save the figures—the additional settings in the savefig() call removes the white border around the plot.

# In order to find SSIM, need to save figures as an image file.

# Below is an example of how you would save your figures as a png file.

plt.plot(human_coord[0, :], human_coord[1, :], 'k.', markersize=1)

plt.xticks([])

plt.yticks([])

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.gca().set_aspect('equal', 'box')

plt.savefig('../Supplementary Material/CGR/Output/human.png', bbox_inches='tight', pad_inches=0)

# In order to find SSIM, need to save figures as an image file.

# Below is an example of how you would save your figures as a png file.

plt.plot(neanderthal_coord[0, :], neanderthal_coord[1, :], 'k.', markersize=1)

plt.xticks([])

plt.yticks([])

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.gca().set_aspect('equal', 'box')

plt.savefig('../Supplementary Material/CGR/Output/neanderthal.png', bbox_inches='tight', pad_inches=0)

Once we have saved the images as a .png file, we use Python’s skimage.io.imread() function to read in the images as matrices. We also specify in the parameters of this function that this image is greyscale, which returns an \(m\times n\) matrix (\(m\) and \(n\) are dependent on the dimensions of the image). If the parameter as_gray was not set to True, then the output would be a \(m \times n \times 3\), which accounts for the different color channels (RGB).

human_CGR = skimage.io.imread('../Supplementary Material/CGR/Output/human.png', as_gray=True)

neanderthal_CGR = skimage.io.imread('../Supplementary Material/CGR/Output/neanderthal.png', as_gray=True)

Now that the two images have been converted to matrices, we use Python’s structural_similarity function from the skimage.metrics library (aliased as ssim below) and calculate the structural similarity index between the two images.

human_neanderthal_ssim = ssim(human_CGR, neanderthal_CGR)

print(human_neanderthal_ssim)

0.8968926645376538

and therefore, the structural dissimilarity index is then

1 - human_neanderthal_ssim

0.10310733546234618

which agrees with our expectations.

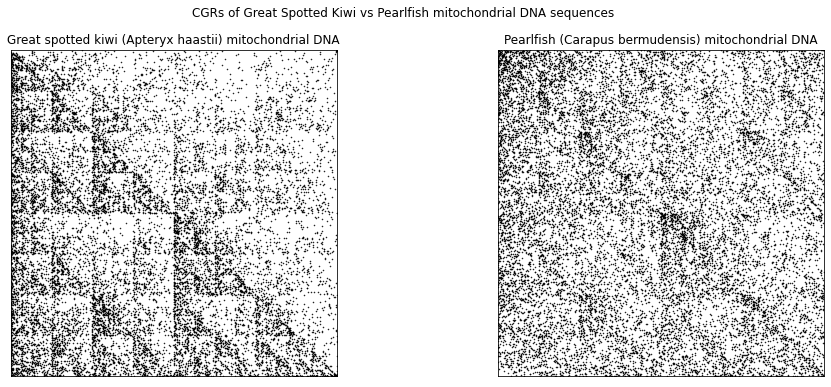

In the following, we look at another example that compares the mtDNA of humans to other species, such as the great spotted kiwi (left) and pearlfish (right).

kiwi_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/NC_002782.fasta')

kiwi_coord = dna_cgr(kiwi_dna)

pearlfish_dna = parse_sequence('../Supplementary Material/CGR/FASTA Files/NC_004373.fasta')

pearlfish_coord = dna_cgr(pearlfish_dna)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(16, 6))

fig.suptitle('CGRs of Great Spotted Kiwi vs Pearlfish mitochondrial DNA sequences')

ax1.plot(kiwi_coord[0, :], kiwi_coord[1, :], 'k.', markersize=1)

ax1.axes.xaxis.set_visible(False)

ax1.axes.yaxis.set_visible(False)

ax1.set_xlim([0, 1])

ax1.set_ylim([0, 1])

ax1.set_aspect('equal', 'box')

ax1.set_title('Great spotted kiwi (Apteryx haastii) mitochondrial DNA')

ax2.plot(pearlfish_coord[0, :], pearlfish_coord[1, :], 'k.', markersize=1)

ax2.axes.xaxis.set_visible(False)

ax2.axes.yaxis.set_visible(False)

ax2.set_xlim([0, 1])

ax2.set_ylim([0, 1])

ax2.set_aspect('equal', 'box')

ax2.set_title('Pearlfish (Carapus bermudensis) mitochondrial DNA')

plt.show()

# In order to find SSIM, need to save figures as an image file.

# Below is an example of how you would save your figures as a png file.

plt.plot(kiwi_coord[0, :], kiwi_coord[1, :], 'k.', markersize=1)

plt.xticks([])

plt.yticks([])

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.gca().set_aspect('equal', 'box')

plt.savefig('../Supplementary Material/CGR/Output/greatspottedkiwi.png', bbox_inches='tight', pad_inches=0)

# In order to find SSIM, need to save figures as an image file.

# Below is an example of how you would save your figures as a png file.

plt.plot(pearlfish_coord[0, :], pearlfish_coord[1, :], 'k.', markersize=1)

plt.xticks([])

plt.yticks([])

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.gca().set_aspect('equal', 'box')

plt.savefig('../Supplementary Material/CGR/Output/pearlfish.png', bbox_inches='tight', pad_inches=0)

Just visually, the CGR of the mtDNA of the great spotted kiwi looks more similar to the CGRs of the human and neanderthal than the CGR of the pearlfish. Using all of the DSSIM, we can produce what is called a distance matrix, which is a square, real, symmetric matrix where each element is the structural dissimilarity index of two species with corresponds to the row and column. The distance matrix for the four species that we have already produced the CGR for is

def dist_matrix(cgr_list):

n = len(cgr_list)

dmat = np.zeros((n, n))

for i in range(n):

for j in range(i+1, n):

dmat[i, j] = 1 - ssim(cgr_list[i], cgr_list[j])

dmat[j, i] = dmat[i, j]

return(dmat)

kiwi_CGR = skimage.io.imread('../Supplementary Material/CGR/Output/greatspottedkiwi.png', as_gray=True)

pearlfish_CGR = skimage.io.imread('../Supplementary Material/CGR/Output/pearlfish.png', as_gray=True)

CGR_list = [human_CGR, neanderthal_CGR, kiwi_CGR, pearlfish_CGR]

dssim = dist_matrix(CGR_list)

dssim

array([[0. , 0.10310734, 0.76524434, 0.85537312],

[0.10310734, 0. , 0.76727659, 0.85644889],

[0.76524434, 0.76727659, 0. , 0.85483255],

[0.85537312, 0.85644889, 0.85483255, 0. ]])

where the first row/column represents humans, the second row/column represents the neanderthals, the third row/column represents the great spotted kiwi, and lastly, the fourth row/column represents the pearlfish. Obviously, the diagonal of the matrix would be \(0\), since the DSSIM of two of the same images would produce a result of 0. As we have seen previously, the \(\mathrm{DSSIM}(\mathrm{human},\ \mathrm{neanderthal}) = 0.10310734\), and vice versa. From this matrix, we can see that the CGR of the mtDNA of pearlfish is most different than the rest—this agrees with what we have observed.

Multi-dimensional scaling (MDS) is a means of visualizing the level of similarity or dissimilarity of individual case of a dataset. It has been used in various fields such as cognitive science, information science, pychometrics, marketing, ecology, social science and other areas of study [Borg and Groenen, 2005]. The goal of MDS is to find a spatial configuration of objects when all that is known is some measure of their general similarity or dissimilarity [Wickelmaier, 2003]. Here, the MDS takes in the distance matrix (of size \(n\) by \(n\)) and outputs a \(n\) by \(p\) configuration matrix, where each row of this matrix represents the coordinates of the item in \(p\) dimension. In this unit, we will only be looking at \(p = 2\) and \(p = 3\) as it is difficult to create visualization in dimensions greater than 3. In [Kari et al., 2013], the authors used a classical MDS, which assumes that all the distances (from the distance matrix) are Euclidean. For the algorithm for classical MDS, refer to [Wickelmaier, 2003](p. 10). Although Python’s scikit-learn library has a MDS function (sklearn.manifold.MDS()), we provide a different function that performs classical multidimensional scaling below. The following function computes the coordinates of the points under the new map much quicker than the MDS function in the scikit-learn library.

# Function taken from https://pyseer.readthedocs.io/en/master/_modules/pyseer/cmdscale.html

def cmdscale(D):

"""Classical multidimensional scaling (MDS)

Args:

D (numpy.array)

Symmetric distance matrix (n, n)

Returns:

Y (numpy.array)

Configuration matrix (n, p). Each column represents a dimension. Only the

p dimensions corresponding to positive eigenvalues of B are returned.

Note that each dimension is only determined up to an overall sign,

corresponding to a reflection.

e (numpy.array)

Eigenvalues of B (n, 1)

"""

# Number of points

n = len(D)

# Centering matrix

H = np.eye(n) - np.ones((n, n))/n

# YY^T

B = -H.dot(D**2).dot(H)/2

# Diagonalize

evals, evecs = np.linalg.eigh(B)

# Sort by eigenvalue in descending order

idx = np.argsort(evals)[::-1]

evals = evals[idx]

evecs = evecs[:, idx]

# Compute the coordinates using positive-eigenvalued components only

w, = np.where(evals > 0)

L = np.diag(np.sqrt(evals[w]))

V = evecs[:, w]

Y = V.dot(L)

return Y, evals[evals > 0]

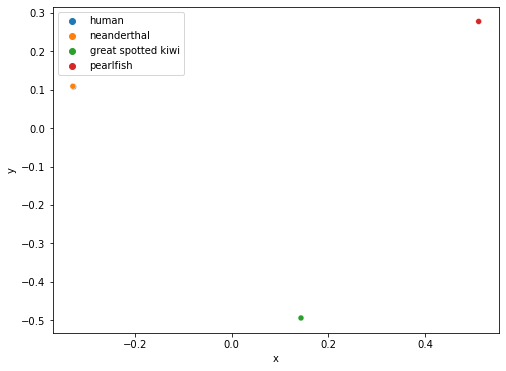

We perform the classical MDS on the dissimilarity matrix dssim calculated above. Even though the cmdscale function returns two outputs, we only require the first output (the configuration matrix) for our visualization. We can also converted the matrix into a pandas dataframe to clarify which row corresponds which animal.

Y, _ = cmdscale(dssim)

Y_df = pd.DataFrame(data=Y, columns = ['x', 'y', 'z'])

Y_df['animal'] = ['human', 'neanderthal', 'great spotted kiwi', 'pearlfish']

Y_df

| x | y | z | animal | |

|---|---|---|---|---|

| 0 | -0.326245 | 0.107564 | 0.051616 | human |

| 1 | -0.327661 | 0.109078 | -0.051471 | neanderthal |

| 2 | 0.143462 | -0.494346 | -0.000133 | great spotted kiwi |

| 3 | 0.510445 | 0.277704 | -0.000012 | pearlfish |

To plot the points in in 2 dimensions, we use the first two columns as our \(x\) and \(y\) coordinates, respectively. Here, we decide to use the seaborn data visualization library (which is based on matplotlib) as this library specializes in dealing with data stored in a dataframe.

fig, ax = plt.subplots()

fig.set_size_inches(8, 6)

sns.scatterplot(x='x', y='y', hue='animal', data=Y_df)

legend = ax.legend()

plt.show()

From the plot above, we are able to observe the degree of difference between the four CGRs by the distance between the four points. Since the human and neanderthal points overlap each other, this indicates that their CGRs are very similar, thus implying that the mtDNA of human and neanderthals are also similar. If we were interested in knowing whether humans are more similar to the great spotted kiwi or pearlfish, we can easily see from the plot above that humans are closer related to the great spotted kiwi genetically since the distance between the human and great spotted kiwi points are closer than the distance between the human and pearlfish points.

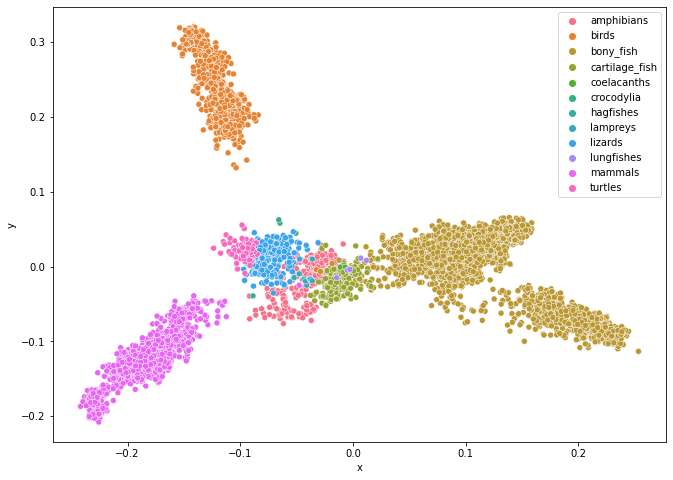

Now that we understand the idea of using classical multidimensional scaling, we can apply this method to a large set of animals (in this case 4844). This will allow us to visualize how similar the animals are genetically to each other, which could potentially help with classifying animals in which their class is ambiguous.

Since it takes an extremely long time to calculate the dissimilarity matrix for 4844 vertebrate animals (it took us around 8 hours to compute the dissimilarity matrix in parallel with 12 threads, using an AMD Ryzen 5 3600X 6-Core Processor), we have already calculated it for you and provided the data file, which can be found in the Supplementary Material folder in our Github repository. The code used to create the dissimilarity matrix is provided in the code folder of our repository (read the README.md file for instructions on the order to run the code). Now, let us apply the classical MDS to the dissimilarity matrix provided.

# Load list of animals and their classes. This will be used to colour the points on the scatter plot

file = open("../Supplementary Material/CGR/Data Files/animal_class.pkl", "rb")

animal_list = pickle.load(file)

file.close()

animal_class = list(animal_list.values())

DSSIM = np.load('../Supplementary Material/CGR/Data Files/DSSIM_matrix.dat', allow_pickle=True)

coord, _ = cmdscale(DSSIM)

coord_splice = coord[:, :3]

cmdscaling_df = pd.DataFrame(data = coord_splice, columns = ['x', 'y', 'z'])

cmdscaling_df['class'] = animal_class

cmdscaling_df.head()

| x | y | z | class | |

|---|---|---|---|---|

| 0 | -0.044784 | -0.017598 | 0.023091 | amphibians |

| 1 | -0.040420 | 0.012079 | 0.012869 | amphibians |

| 2 | -0.044732 | -0.027890 | 0.028080 | amphibians |

| 3 | -0.023975 | 0.015180 | 0.030752 | amphibians |

| 4 | -0.053848 | -0.068480 | 0.015756 | amphibians |

fig = plt.figure(figsize=(11, 8))

ax = sns.scatterplot(x='x', y='y', hue='class', data=cmdscaling_df)

legend = ax.legend()

plt.show()

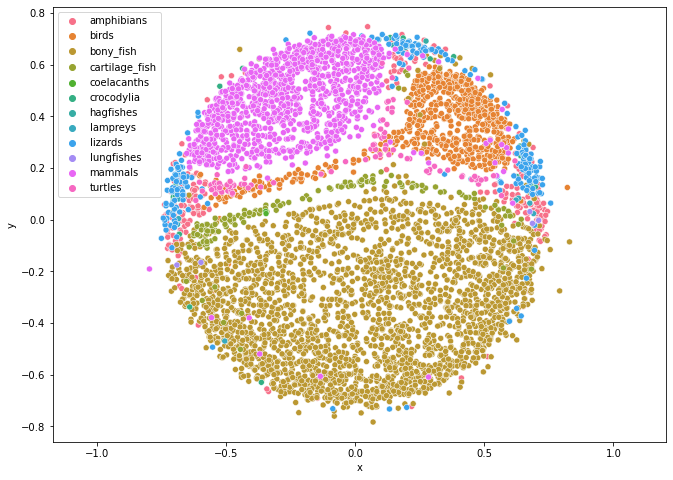

The plot above shows a molecular distance map of 4844 animals from various classes from the chordate phylum (meaning that all these animals have a vertebrate), coloured according to their class. The sequences were taken from NCBI Reference Sequence Database (RefSeq). We can see from this figure that this method (Map of Life) does quite a good job in sorting species into different categories. One particular aspect that we want to point out in this figure is where the points representing the lungfish are—they are in the area of where the bony fish, cartilage fish, and amphibians touch. This is truly remarkable as lung fish has qualities of all these classes.

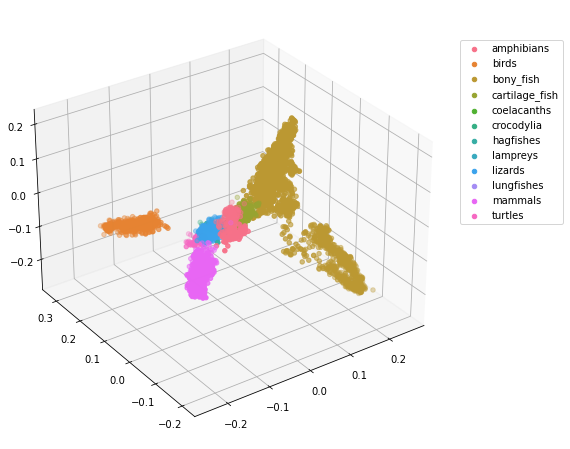

Since the points overlap one another, we could also plot the points in 3 dimensions.

# Since for 3D scatter plot, we need to plot each class of animals separately, we pre-assign colours to each animal class

animal_class_unique = cmdscaling_df['class'].unique()

cmap = sns.color_palette("husl", 12)

# %matplotlib notebook

fig = plt.figure(figsize=(11, 8))

ax = plt.axes(projection = '3d')

fig.add_axes(ax)

for i in range(len(animal_class_unique)):

data = cmdscaling_df.loc[cmdscaling_df['class'] == animal_class_unique[i]]

ax.scatter(data['x'], data['y'], data['z'], label=animal_class_unique[i], color=cmap[i])

ax.view_init(30, -125)

plt.legend(loc=(1.04,0.5))

plt.show()

If you are viewing this in jupyter notebook, uncomment the first line of the previous code block and rerun the code block. This will allow you to rotate the 3D plot above! (Before moving on to the following code blocks run the line %matplotlib inline again so that the following plots will render within the notebook.)

If you are curious as to how to use the sklearn.manifold.MDS() function, the code for multidimensional scaling for the 4844 vertebrates is given in the following code block. Warning: if you are not performing the following code block in parallel, it will take around half an hour to complete. Additionally, since our DSSIM variable is already a dissimilarity matrix, we set the parameter dissimilarity in our sklearn.manifold.MDS() class to precomputed. However, be aware that the dissimilarity matrix DSSIM is not Euclidean, and therefore, the results using the sklearn.maifold.MDS() class will differ from the results shown previously.

md_scaling = manifold.MDS(n_components=2, dissimilarity='precomputed', random_state=0, n_jobs=mp.cpu_count())

coord = md_scaling.fit_transform(DSSIM)

mdscaling_df = pd.DataFrame(data = coord, columns = ['x', 'y'])

mdscaling_df['class'] = animal_class

mdscaling_df.head()

| x | y | class | |

|---|---|---|---|

| 0 | -0.425241 | 0.569110 | amphibians |

| 1 | 0.712073 | 0.134961 | amphibians |

| 2 | -0.660362 | -0.025253 | amphibians |

| 3 | 0.703996 | 0.048820 | amphibians |

| 4 | -0.589360 | 0.044000 | amphibians |

fig = plt.figure()

fig.set_size_inches(11, 8)

ax = sns.scatterplot(x='x', y='y', hue='class', data=mdscaling_df)

legend = ax.legend()

plt.axis('equal')

plt.show()

Although the results from using the sklearn.manifold.MDS() class differs from the result from the cmdscale() function, we observe that this plot is still able to group the animals by class quite well.

CGR Activity 5

Create a molecular distance map of the mitochondrion DNA of molluscs. The Fasta files are provided to you and can be found in the Supplementary Material/CGR/Activities/Molluscs/Fasta Files folder. [What happened when we did this]

Protein Visualization#

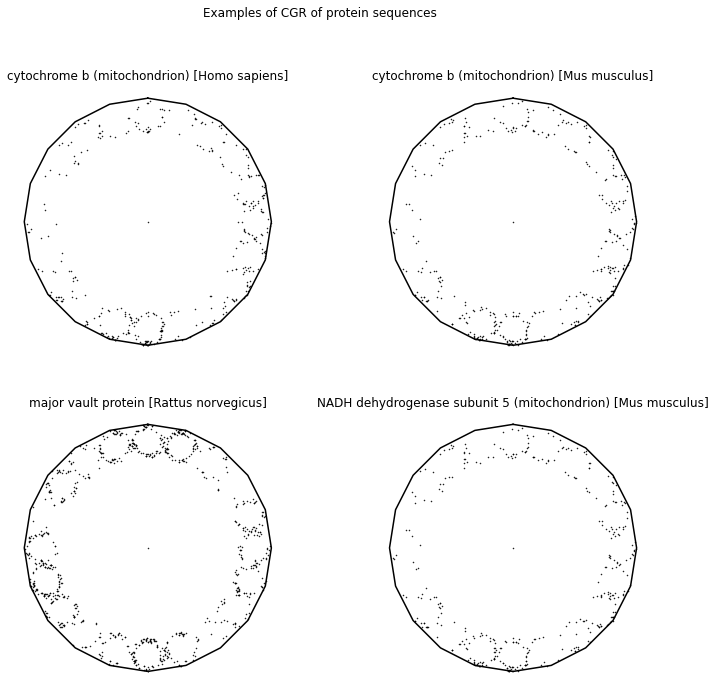

CGR has also been applied to visualizing and analyzing both the primary and secondary structures of proteins. The primary structure of a protein is simply an amino acid sequence. To analyze the primary structure of proteins, the authors from [Fiser et al., 1994] using an \(20\)-sided regular polygon, each representing an amino acid. Table 2 shows all 20 amino acids along with their 3-letter code, as well as their 1-letter code. To avoid the attractor from overlapping itself, we will use the dividing rate for a \(20\)-gon shown from [Almeida and Vinga, 2009], \(r = 0.863271\). Some CGRs for protein visualizations can be found in the following.

Name |

3 letter code |

1 letter code |

|---|---|---|

alanine |

ala |

A |

arginine |

arg |

R |

asparagine |

asn |

N |

aspartic acid |

asp |

D |

cysteine |

cys |

C |

glutamine |

gln |

Q |

glutamic acid |

glu |

E |

glycine |

gly |

G |

histidine |

his |

H |

isoleucine |

ile |

I |

leucine |

leu |

L |

lysine |

lys |

K |

methionine |

met |

M |

phenylalanine |

phe |

F |

proline |

pro |

P |

serine |

ser |

S |

threonine |

thre |

T |

tryptophan |

trp |

W |

tyrosine |

tyr |

Y |

valine |

val |

V |

def protein_cgr(seq):

N = len(seq)

AA = 'ARNDCQEGHILKMFPSTWYVBZ'

# B = Aspartic acid (D) or Asparagine (N)

# Z = Glutamic acid (E) or Glutamine (Q)

vertices = n_gon(20, 90)

r = dividingRateAlmeida(20)

dataPoints = np.zeros((2, N+1))

for i in range(1, N+1):

index = AA.index(seq[i-1])

if index == 20:

r = random.randint(0, 1)

if r == 0:

index = AA.index('D')

else:

index = AA.index('N')

elif index == 21:

r = random.randint(0, 1)

if r == 0:

index = AA.index('E')

else:

index = AA.index('Q')

dataPoints[:, i] = dataPoints[:, i-1] + (vertices[:, index] - dataPoints[:, i-1])*r

return(vertices, dataPoints)

fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, figsize=(12, 11))

fig.suptitle('Examples of CGR of protein sequences')

human_cytochrome_b_seq = parse_sequence('../Supplementary Material/CGR/FASTA Files/ASY00349.fasta')

(coord, points) = protein_cgr(human_cytochrome_b_seq)

ax1.plot(coord[0, :], coord[1, :], 'k')

ax1.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax1.axis('off')

ax1.set_aspect('equal', 'box')

ax1.set_title('cytochrome b (mitochondrion) [Homo sapiens]')

mouse_cytochrome_b_seq = parse_sequence('../Supplementary Material/CGR/FASTA Files/NP_904340.fasta')

(coord, points) = protein_cgr(mouse_cytochrome_b_seq)

ax2.plot(coord[0, :], coord[1, :], 'k')

ax2.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax2.axis('off')

ax2.set_aspect('equal', 'box')

ax2.set_title('cytochrome b (mitochondrion) [Mus musculus]')

major_vault_protein_seq = parse_sequence('../Supplementary Material/CGR/FASTA Files/NP_073206.fasta')

(coord, points) = protein_cgr(major_vault_protein_seq)

ax3.plot(coord[0, :], coord[1, :], 'k')

ax3.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax3.axis('off')

ax3.set_aspect('equal', 'box')

ax3.set_title('major vault protein [Rattus norvegicus]')

NADH_dehydrogenase_seq = parse_sequence('../Supplementary Material/CGR/FASTA Files/NP_904340.fasta')

(coord, points) = protein_cgr(NADH_dehydrogenase_seq)

ax4.plot(coord[0, :], coord[1, :], 'k')

ax4.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax4.axis('off')

ax4.set_aspect('equal', 'box')

ax4.set_title('NADH dehydrogenase subunit 5 (mitochondrion) [Mus musculus]')

plt.show()

CGR Activity 6

Create a molecular distance map of the cytochrome b (mitochondrion) protein in all land plants. Fasta files are located in the Supplementary Material/CGR/Activities/Land Plants/Fasta Files folder. [What happened when we did this]

According to Basu et al. [Basu et al., 1997], there are two serious limitations for using a 20-vertex CGR to identify patterns. The first limitation the authors mentioned about using the 20-vertex is that it is hard to visualize different protein families since one would have to plot the sequences in different polygons rather than plotting a random sampling of proteins of different functions and origins in a single CGR [Fiser et al., 1994]. The second limitation is that the amino acid residues in different positions are often replaced by similar amino acids [Basu et al., 1997]. This means that the 20-vertex CGR cannot be used to differentiate between similar and dissimilar residues and the visualization could be different for proteins within the same family.

To fix the second limitation mentioned in [Basu et al., 1997], Fiser et al. [Fiser et al., 1994] introduced using CGR to study 3D structures of proteins. A chain of amino acid is what is called a polypeptide. Due to each amino acid having a specific structure, which contributes to the amino acid’s properties, such as hydrophobicity or hydrogen-bonding, depending on the sequence of the amino acid chain, the polypeptide chain will fold into its lowest energy configuration [Simon et al., 1991]. This is known as the secondary structure. The two most common structures that appear in this stage are the \(\alpha\)-helices and the \(\beta\)-sheets. Using the chaos game on the secondary structure can indicate any non-randomness of the structural elements in proteins. One way to achieve this is to divide the 20 amino acids into 4 groups based on different properties and assign each group to a corner. Some properties that have been considered for CGR include hydrophobicity, molecular weight, isoelectric point (pI), \(\alpha\) propensity, and \(\beta\) propensity. For details, see [Basu et al., 1997, Fiser et al., 1994].

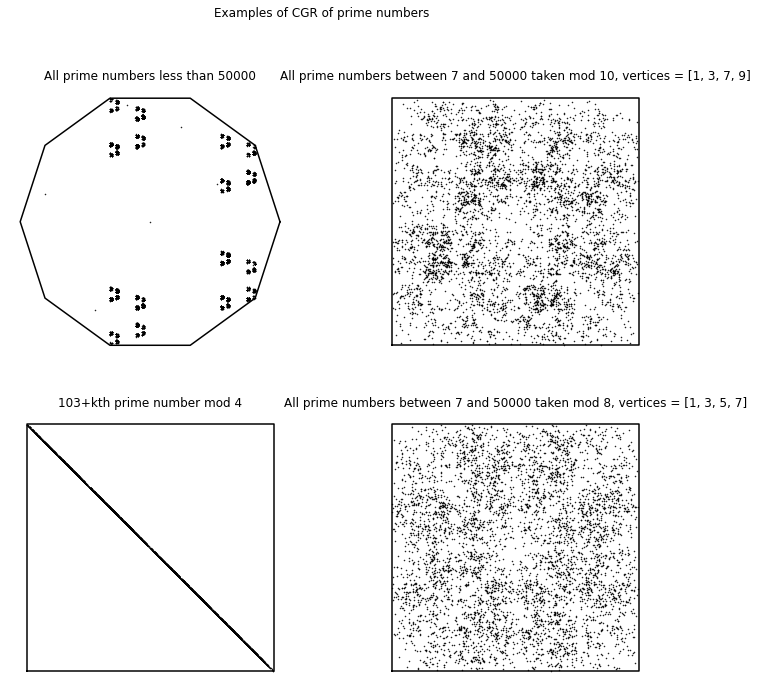

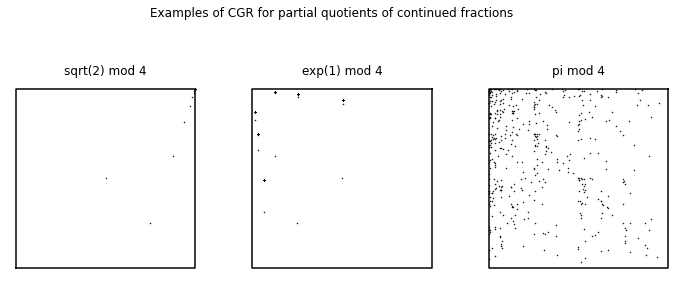

CGR of Mathematical Sequences#

In the first-year course we gave in 2015 [Chan and Corless, 2020], we applied the chaos game representation to mathematical sequences found on the Online Encyclopedia of Integer Sequences (OEIS) [Inc., 2019]. In the following, we show some CGR experiments that came from the first-year course. Some sequences that were used from the Online Encyclopedia of Integer Sequences (OEIS) included digits of \(\pi\) (A000796), Fibonacci Numbers (A000045), prime numbers (A000040), and some from the continued fractions section (which were introduced at the beginning of the course). We will not only look at the visualizations created in the first-year course, but we will also determine whether these experiments are considered to be good visual representations.

Digits of Pi#

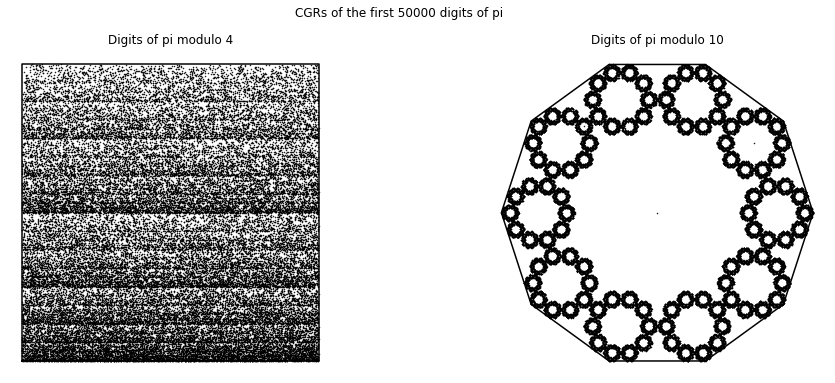

We first applied CGR to the digits of \(\pi\) (A000796 from the OEIS), since we believe that the sequence of the digits are random6. Since we focused on the 4-vertex CGR in the course, we naïvely took the digits of \(\pi\) modulo 4 and applied it to the 4-vertex CGR. The result of this is shown in the left figure below. Since we assumed that the digits of \(\pi\) are random, this means that the visualization should be similar to the square-shaped CGR of random integer sequences from Different Polygons. However, we can see that this is not the case; instead of a uniform covering of the space, we can see a pattern of vertical lines occurring. Quantitatively, the DSSIM index between the CGR of the digits of \(\pi\) modulo 4 and the CGR of random digits is \(0.9851\), which indicates that they are not similar. The reason for this is because when we take \(10\bmod{4}\), the values are distributed to the corners unevenly: our 0 and 1 vertices would have one extra value each, and thus why our CGR of the digits of \(\pi\) does not match the CGR of random integers even though we know for a fact that the digits of \(\pi\) are indeed random. Due to this reason, the left figure below is not a good visual representation of the digits of \(\pi\).

However, there are solutions to this problem. Through personal communication, Lila Kari suggested reading 2 digits of \(\pi\) at a time (i.e. the first few 2-digit number of \(\pi\) would be \(14\), \(15\), \(92\), etc.). As the smallest and largest possible number would be 0 and 99, respectively, this means that there are 100 possible combinations, and therefore 100 different numbers. Therefore, since 100 is divisible by 4, this means that we can group the numbers evenly into 4 groups, whether it is by taking the modulo (base 4) of the numbers, or dividing the numbers by the groups 0-24, 25-49, 50-74, and 75-99.

Another solution to this is to visualize the digits of \(\pi\) is shown in the right figure below. We use a 10-vertex CGR (\(r = 0.763932\)) where each vertex represents an integer from 0 to 9. From this figure, we can see that the points produce a fractal pattern of the decagon, which suggests that the sequence is random.

def pi_digits(n):

S = str(sympy.N(sympy.pi, n+1))

return(list(map(int, list(S[2:]))))

def numeric_cgr(seq, n_ver, *start):

N = len(seq)

if start:

vertices = n_gon(n_ver, start[0])

else:

vertices = n_gon(n_ver, 0)

r = dividingRateAlmeida(n_ver)

dataPoints = np.zeros((2, N+1))

for i in range(1, N+1):

index = seq[i-1]%n_ver

dataPoints[:, i] = dataPoints[:, i-1] + (vertices[:, index] - dataPoints[:, i-1])*r

return(vertices, dataPoints)

N = 50000

pi_seq = pi_digits(N)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(16, 6))

fig.suptitle('CGRs of the first {} digits of pi'.format(N))

(coord, points) = numeric_cgr(pi_seq, 4, -135)

ax1.plot(coord[0, :], coord[1, :], 'k')

ax1.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax1.axis('off')

ax1.set_aspect('equal', 'box')

ax1.set_title('Digits of pi modulo 4')

(coord, points) = numeric_cgr(pi_seq, 10)

ax2.plot(coord[0, :], coord[1, :], 'k')

ax2.plot(points[0, :], points[1, :], 'k.', markersize=1)

ax2.axis('off')

ax2.set_aspect('equal', 'box')

ax2.set_title('Digits of pi modulo 10')

plt.show()

CGR Activity 7

Create a square CGR for the digits of \(pi\) by reading 2 digits at a time (as explained above). Assign the two-digit numbers a corner by the following two methods:

a) taking the modulo (base 4) and assigning the corners 0, 1, 2, 3 [What happened when we did this]

b) assigning the corners to cover the values 0-24, 25-49, 50-74, and 75-99, respectively. [What happened when we did this]

c) Do the CGRs look different from one another? What conclusions can you draw from the two CGRs? [What happened when we did this]

CGR Activity 8

Repeat the above activity with the digits of \(e = 2.71828...\) [What happened when we did this]

Fibonacci Sequence#

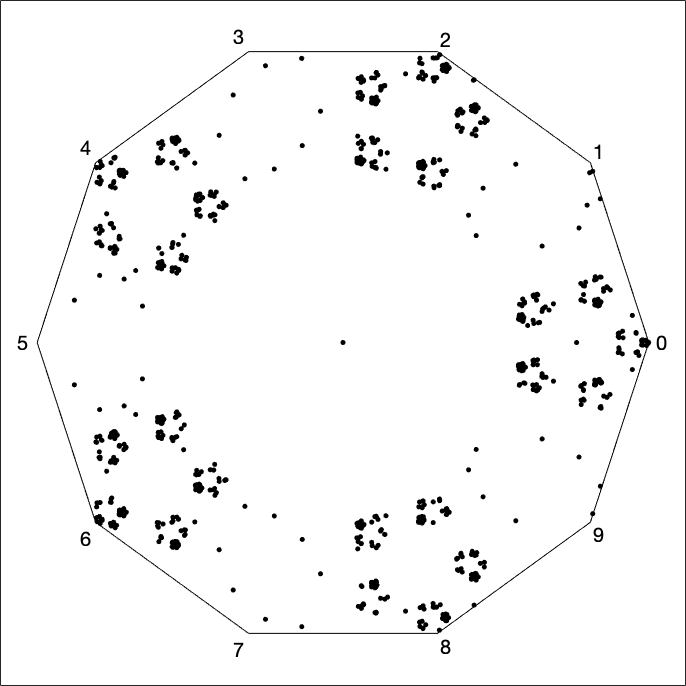

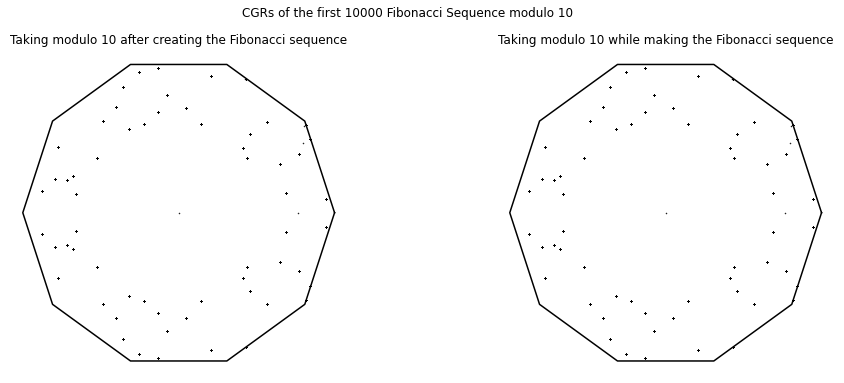

We also created a visualization of the Fibonacci Sequence (A000045 from the OEIS). One example of a visualization of this sequence is a 10-vertex CGR of the Fibonacci sequence modulo 10; that is, a sequence of the final digits of \(F_n\). It appears by the density of the even blocks in Fig. 12 that most (by far and away most) of the numbers of the Fibonacci sequence are even; this conclusion seems very strange, and deserves a closer look.

In fact the sequence is ultimately periodic (as of course it must be, because the set of decimal digits is finite), and indeed it has period \(60\): The first \(65\) entries are \(1, 1, 2, 3, 5, 8, 3, 1, \ldots, 9, 1, 0, 1, 1, 2, 3, 5\) and it repeats from there. Just by counting, \(40\) out of these \(60\) numbers are odd, and only \(20\) are even; so the conclusion that “almost all Fibonacci numbers are even” that we drew from Fig. 12 is completely wrong!

Fig. 12 CGR of the Fibonacci sequence calculated in floating-point arithmetic before taking the remainder mod 10 in Matlab#